simlr_interpretation

simlr_interpretation.RmdSimilarity-driven multi-view linear reconstruction

Introduction

Modern science generates data from multiple sources (e.g., genetics, imaging, behavior), yet integrating these ‘views’ to find a single, coherent underlying signal is a major statistical challenge. Similarity-driven multi-view linear reconstruction (SiMLR) addresses this by exploiting inter-modality relationships to transform large scientific datasets into a smaller joint space. The link between the original data () and the reduced embedding space is a sparse set of features ( ). Standard statistical tools may then be applied on the embeddings .

SiMLR may be used in a variety of other ways. We will cover a basic example and follow with different use cases. These examples are perhaps less involved than those given in the original publication’s cloud computing examples but illustrate new functionality.

Example: Applying SiMLR to Simulated Multi-View Data

In this section, we explore how SiMLR can be applied to multi-view datasets. We’ll generate synthetic data to simulate the application of SiMLR, compare the results to traditional methods like Singular Value Decomposition (SVD), and visualize the relationships between the views. This example derives from the simulation-based evaluation in the original paper.

Step 1: Simulate Multi-View Data

We begin by simulating three different views of data, each representing different modalities or datasets that share some underlying structure.

library(forcats)

library(ANTsR)

#> Warning: replacing previous import 'stats::filter' by 'dplyr::filter' when

#> loading 'ANTsR'

#> ANTsR 0.6.4

#>

#> Attaching package: 'ANTsR'

#> The following objects are masked from 'package:stats':

#>

#> sd, step, var

#> The following objects are masked from 'package:base':

#>

#> all, any, apply, max, min, prod, range, sum

library(reshape2)

set.seed(1500)

nsub <- 100 # Number of subjects/samples

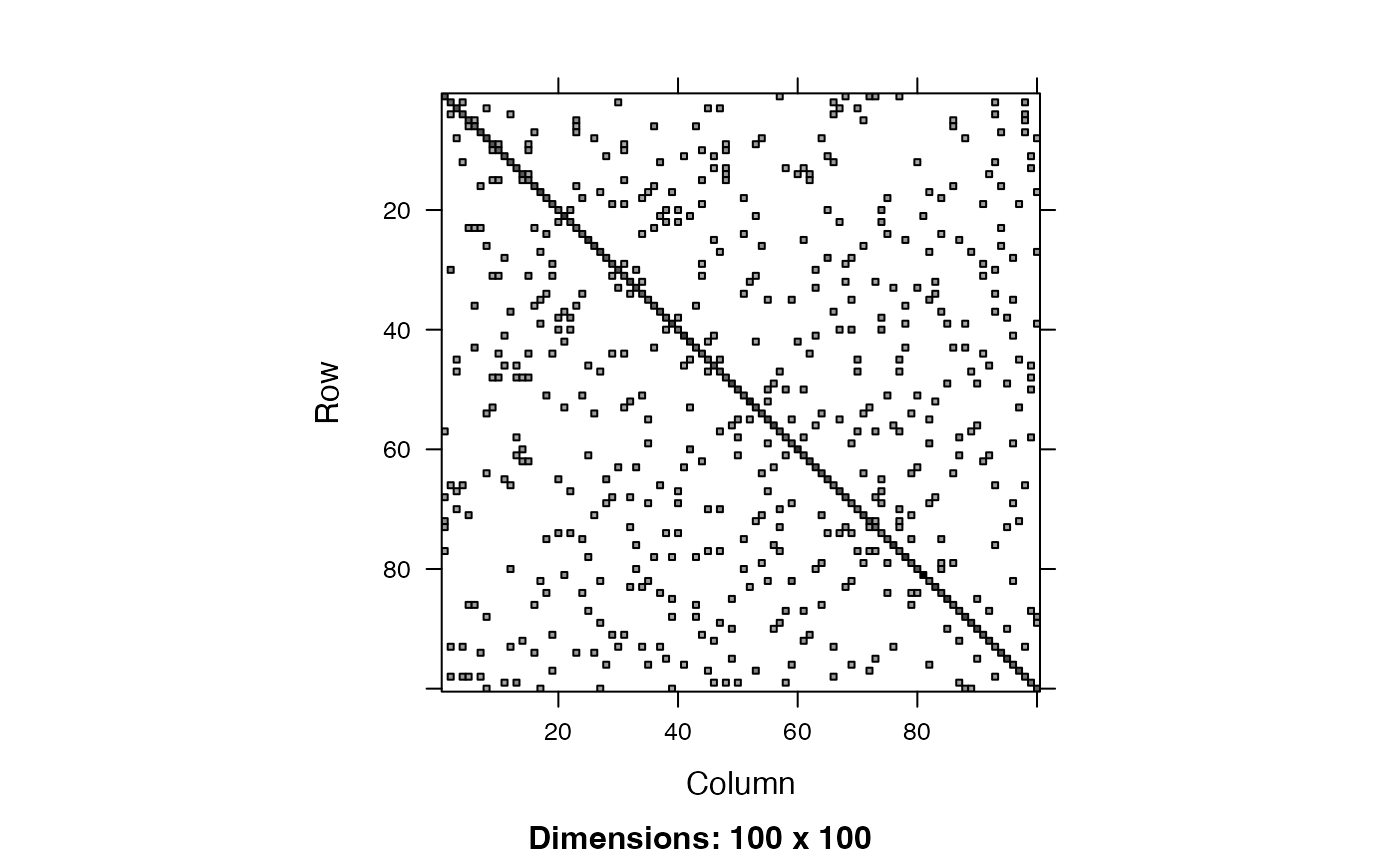

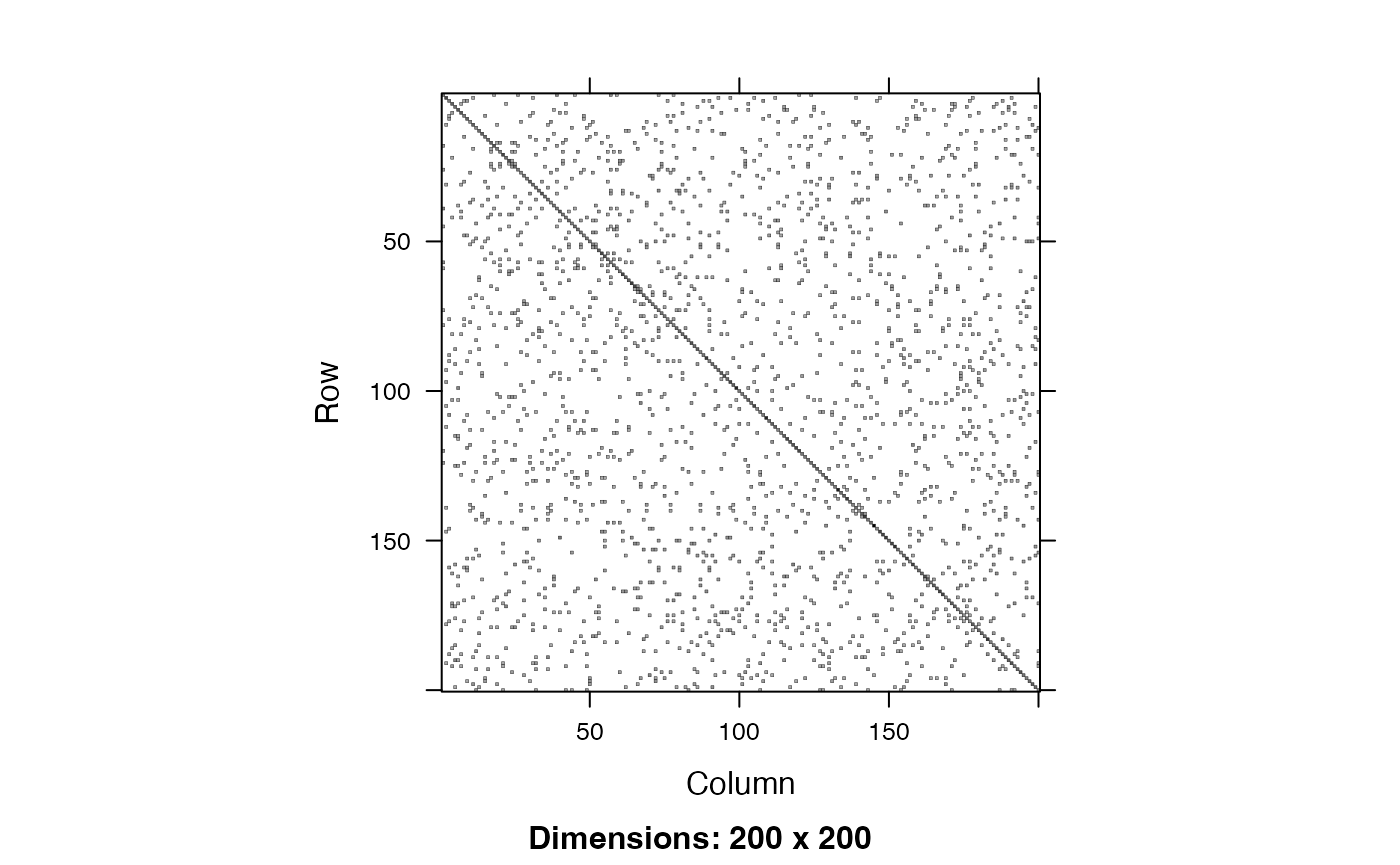

npix <- c(100, 200, 133) # Number of features in each view

nk <- 5 # Number of latent factors

# Generating outcome matrices for each view

outcome <- matrix(rnorm(nsub * nk), ncol = nk)

outcome1 <- matrix(rnorm(nsub * nk), ncol = nk)

outcome2 <- matrix(rnorm(nsub * nk), ncol = nk)

outcome3 <- matrix(rnorm(nsub * nk), ncol = nk)

# Generating transformation matrices for each view

view1tx <- matrix(rnorm(npix[1] * nk), nrow = nk)

view2tx <- matrix(rnorm(npix[2] * nk), nrow = nk)

view3tx <- matrix(rnorm(npix[3] * nk), nrow = nk)

# Creating the multi-view data matrices

mat1 <- (outcome %*% t(outcome1) %*% (outcome1)) %*% view1tx

mat2 <- (outcome %*% t(outcome2) %*% (outcome2)) %*% view2tx

mat3 <- (outcome %*% t(outcome3) %*% (outcome3)) %*% view3tx

colnames(mat1)=paste0("m1.",1:ncol(mat1))

colnames(mat2)=paste0("m2.",1:ncol(mat2))

colnames(mat3)=paste0("m3.",1:ncol(mat3))

# Combine the matrices into a list

matlist <- list(m1 = mat1, m2 = mat2, m3 = mat3)

gsmd = generate_structured_multiview_data( nsub, npix,

k_shared=nk, k_specific=1, seed=0 )

matlist = gsmd$data_list

mat1 = gsmd$data_list[[1]]

mat2 = gsmd$data_list[[2]]

mat3 = gsmd$data_list[[3]]

outcome = gsmd$ground_truth[[1]]

outcome1 = gsmd$ground_truth[[2]]$View1In this code, mat1, mat2, and

mat3 represent three views of the data. Each view is

constructed to share a common underlying structure but with different

transformations applied.

A more complex data generation scheme is available in

generate_structured_multiview_data.

Step 2: Apply SiMLR

Now we apply the SiMLR algorithm to the simulated multi-view data to find a common representation across the views.

# Applying SiMLR

prepro=c( "centerAndScale","np")

constr='orthox0.02x1' # good default

result <- simlr(matlist,constraint=constr,scale=prepro,

mixAlg='pca', energyType='acc', # recommended

verbose=TRUE)

#>

#> --- Method Summary ---

#> • Mixer Algorithm : pca

#> • Energy Type : acc

#> • Sparseness Alg. : soft

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: bidirectional_lookahead

#> • domain-knowledge : 0

#> ----------------------

#>

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 0.9329915 0.8870375 0.7966900

#> Norm Weights: 0.933, 0.887, 0.797

#> Orth Weights: 0, 0, 0

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 2 | Energy: -0.3344 | Best.Energy: -0.3344 (at iter 2) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 3 | Energy: -0.5193 | Best.Energy: -0.5193 (at iter 3) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 8 | Energy: -0.5621 | Best.Energy: -0.5621 (at iter 8) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 10 | Energy: -0.6426 | Best.Energy: -0.6426 (at iter 10) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 12 | Energy: -0.6976 | Best.Energy: -0.6976 (at iter 12) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 17 | Energy: -0.7498 | Best.Energy: -0.7498 (at iter 17) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> ~~Convergence criteria met @ 68

#> No improvement over 10% of max iterations 50

#> ~~Converged at 16 iterations.

# Projecting data into the reduced space

p1 <- mat1 %*% (result$v[[1]])

p2 <- mat2 %*% (result$v[[2]])

p3 <- mat3 %*% (result$v[[3]])

# pheatmap::pheatmap( result$v[[1]], cluster_cols=F, cluster_rows=F )SiMLR identifies a sparse set of features that best reconstruct each

view, resulting in projections p1, p2, and

p3 for each view.

Step 3: Visualization and Comparison

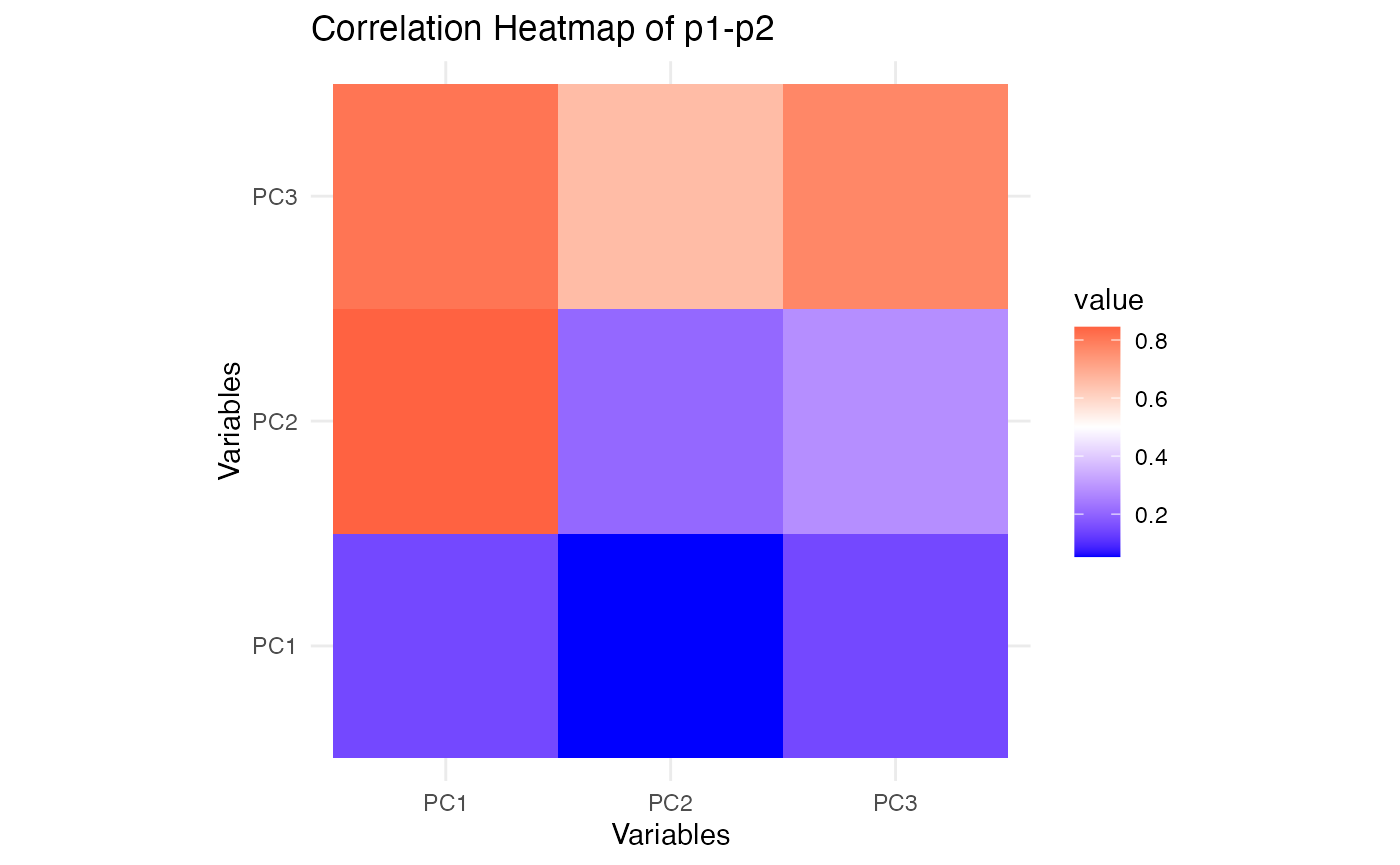

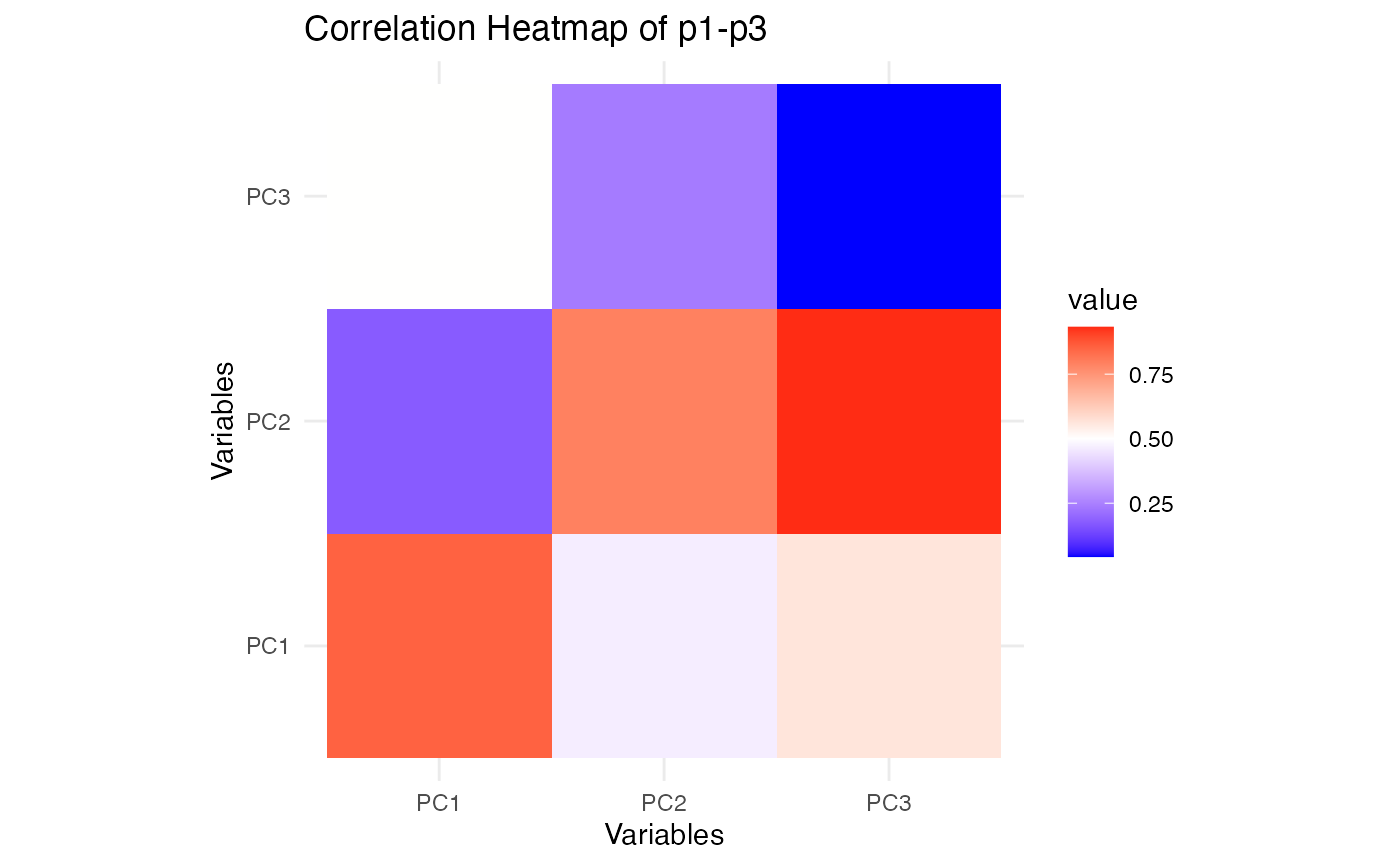

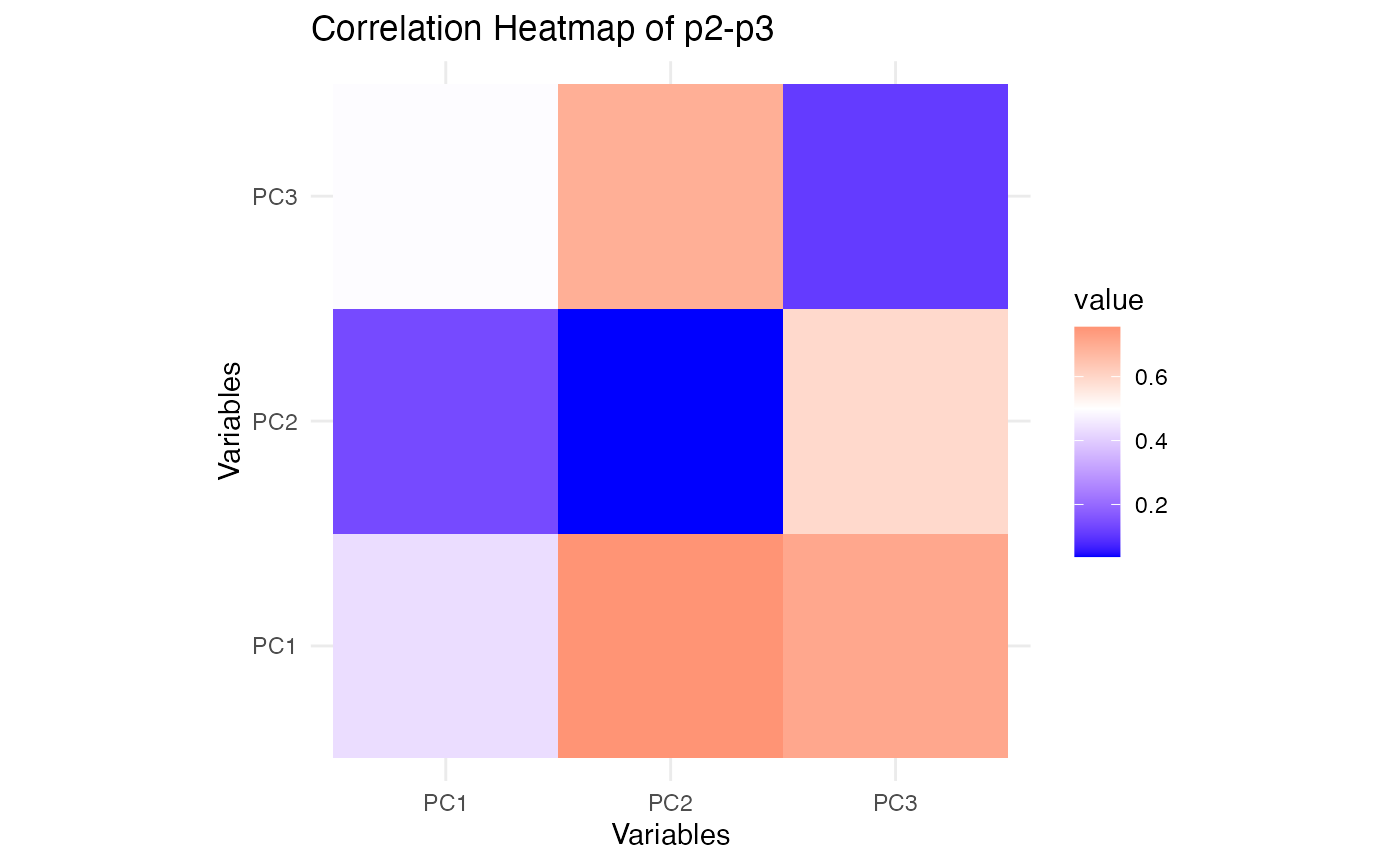

To understand the effectiveness of the SiMLR projections, we can compare the correlations between the projections of different views. Additionally, we’ll compare the results to those obtained via Singular Value Decomposition (SVD).

# Calculate SVD for comparison

svd1 <- svd(mat1, nu = nk, nv = 0)$u

svd2 <- svd(mat2, nu = nk, nv = 0)$u

svd3 <- svd(mat3, nu = nk, nv = 0)$u

# Calculate correlations between the SiMLR projections

cor_p1_p2 <- range(cor(p1, p2))

cor_p1_p3 <- range(cor(p1, p3))

cor_p2_p3 <- range(cor(p2, p3))

# Compare with correlations from SVD

cor_svd1_svd2 <- range(cor(svd1, svd2))

# Print the results

cat("Correlation between p1 and p2:", cor_p1_p2, "\n")

#> Correlation between p1 and p2: -0.7029881 0.8777331

cat("Correlation between p1 and p3:", cor_p1_p3, "\n")

#> Correlation between p1 and p3: -0.53927 0.8316805

cat("Correlation between p2 and p3:", cor_p2_p3, "\n")

#> Correlation between p2 and p3: -0.3277606 0.8645039

cat("Correlation between SVD1 and SVD2:", cor_svd1_svd2, "\n")

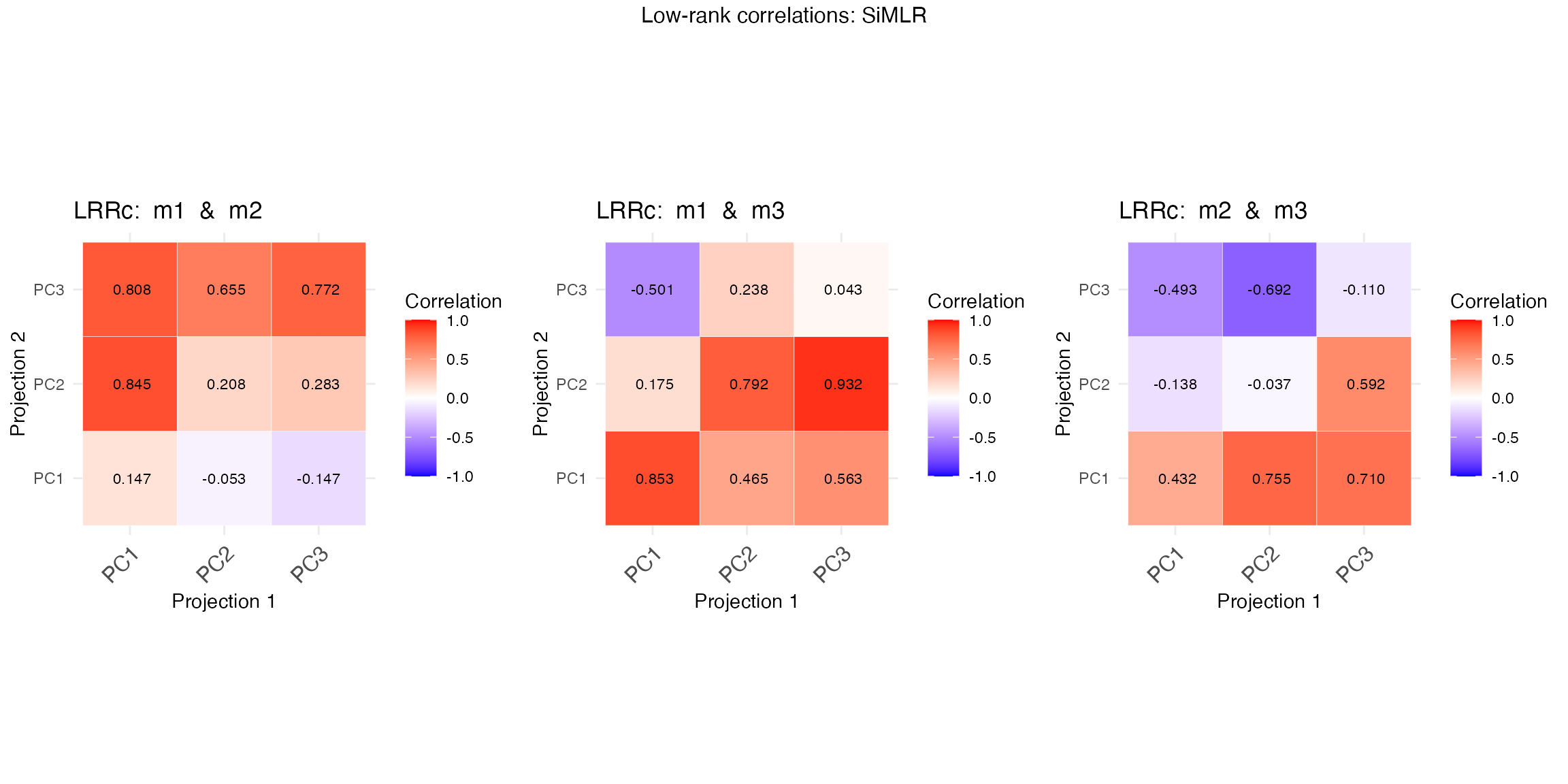

#> Correlation between SVD1 and SVD2: -0.7306001 0.6777956Visualizing Correlations

Visualizing these correlations helps us understand the similarity between the views after the SiMLR transformation.

library(ggplot2)

# Function to plot correlation heatmaps

plot_cor_heatmap <- function(mat1, mat2, title) {

cor_mat <- abs(cor(mat1,mat2))

ggplot(melt(cor_mat), aes(Var1, Var2, fill = value)) +

geom_tile() +

scale_fill_gradient2(midpoint = 0.5, low = "blue", high = "red", mid = "white") +

theme_minimal() +

labs(title = title, x = "Variables", y = "Variables") +

coord_fixed()

}

# Plotting the correlations

plot_cor_heatmap(p1, p2, "Correlation Heatmap of p1-p2")

plot_cor_heatmap(p1, p3,"Correlation Heatmap of p1-p3")

plot_cor_heatmap(p2, p3, "Correlation Heatmap of p2-p3")

These heatmaps show how the reduced representations from SiMLR correlate within each view. Comparing these with similar plots for SVD will reveal whether SiMLR captures more meaningful relationships between the views.

Step 4: Permutation Test

A permutation test can assess whether the observed correlations are significant.

s1 <- sample(1:nsub)

s2 <- sample(1:nsub)

permMats=list(vox = mat1, vox2 = mat2[s1, ], vox3 = mat3[s2, ])

resultp <- simlr(permMats,constraint=constr,scale=prepro,

mixAlg='pca', energyType='acc', # recommended

verbose=TRUE)

#>

#> --- Method Summary ---

#> • Mixer Algorithm : pca

#> • Energy Type : acc

#> • Sparseness Alg. : soft

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: bidirectional_lookahead

#> • domain-knowledge : 0

#> ----------------------

#>

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> vox vox2 vox3

#> 3.072983 1.177631 8.308016

#> Norm Weights: 3.073, 1.178, 8.308

#> Orth Weights: 0, 0, 0

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 2 | Energy: -0.3439 | Best.Energy: -0.3439 (at iter 2) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 3 | Energy: -0.6163 | Best.Energy: -0.6163 (at iter 3) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 4 | Energy: -1.1187 | Best.Energy: -1.1187 (at iter 4) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 8 | Energy: -1.2509 | Best.Energy: -1.2509 (at iter 8) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 12 | Energy: -1.4077 | Best.Energy: -1.4077 (at iter 12) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 32 | Energy: -1.5345 | Best.Energy: -1.5345 (at iter 32) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 48 | Energy: -1.7944 | Best.Energy: -1.7944 (at iter 48) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 57 | Energy: -1.8224 | Best.Energy: -1.8224 (at iter 57) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .bidirectional_linesearch(V_current = V_current, descent_direction =

#> search_direction, : Bidirectional line search failed to find a suitable step

#> size in either direction.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .bidirectional_linesearch(V_current = V_current, descent_direction =

#> search_direction, : Bidirectional line search failed to find a suitable step

#> size in either direction.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> It: 83 | Energy: -1.8894 | Best.Energy: -1.8894 (at iter 83) | Ortho: 0.0000

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> Warning in .robust_backtracking_linesearch(V_current = V_current,

#> descent_direction = -descent_direction, : Robust line search failed to find a

#> suitable step size; possibly at local minimum or numerical issue.

#> ~~Convergence criteria met @ 134

#> No improvement over 10% of max iterations 50

#> ~~Converged at 82 iterations.

p1p <- mat1 %*% (resultp$v[[1]])

p2p <- mat2[s1, ] %*% (resultp$v[[2]])

p3p <- mat3[s2, ] %*% (resultp$v[[3]])

# Compare the permuted correlations

cor_p1p_p2p <- range(cor(p1p, p2p))

cor_p1p_p3p <- range(cor(p1p, p3p))

cor_p2p_p3p <- range(cor(p2p, p3p))

# Print permuted results

cat("Permuted Correlation between p1p and p2p:", cor_p1p_p2p, "\n")

#> Permuted Correlation between p1p and p2p: -0.4449884 0.4524357

cat("Permuted Correlation between p1p and p3p:", cor_p1p_p3p, "\n")

#> Permuted Correlation between p1p and p3p: -0.4693403 0.4408695

cat("Permuted Correlation between p2p and p3p:", cor_p2p_p3p, "\n")

#> Permuted Correlation between p2p and p3p: -0.5197852 0.4801599The permutation test will show if the observed correlations are stronger than those expected by chance.

This introductory example demonstrates how SiMLR can uncover the shared structure across multiple views of data. The correlations between the projections indicate the degree to which the algorithm has captured this shared structure, and the permutation test serves as a validation step.

Integration of Multiple Data Types via Dimensionality Reduction

SiMLR is particularly useful when working with datasets from different modalities (e.g., genomics, proteomics, and imaging data). By finding a joint embedding space, SiMLR can integrate these diverse data types into a unified representation, facilitating downstream analyses such as clustering, classification, or regression.

When dealing with high-dimensional data, dimensionality reduction techniques are often required to make the data more manageable and to avoid issues like the curse of dimensionality. SiMLR provides an approach that not only reduces dimensionality but also maintains the relationships between different views of the data, making it a powerful tool for exploratory data analysis and visualization. Below, we illustrate reading, writing and exploratory integrated visualization.

library(fpc)

library(cluster)

library(gridExtra)

library(ggpubr)

sim2nm=tempfile()

write_simlr_data_frames( result$v, sim2nm )

simres2=read_simlr_data_frames( sim2nm, names(matlist) )

popdf = data.frame( age = outcome, cog=outcome1, mat1, mat2, mat3 )

temp2=apply_simlr_matrices( popdf, simres2, center=TRUE, scale=TRUE )

simnames=temp2[[2]]

zz=exploratory_visualization( temp2[[1]][,simnames[1:4]], dotsne=FALSE )

print( zz$plot )

Now we can icorporate the “modalities” in order to predict the simulated outcome matrix. Note that contributions exist from each modality in the regression (in some cases). This is one of the advantages of SiMLR: it provides systematic guidance for building these types of integrative models.

# multi-view regression

summary( lm( age.1 ~ View1PC1 + View2PC1 + View3PC1, data=temp2[[1]] ))

#>

#> Call:

#> lm(formula = age.1 ~ View1PC1 + View2PC1 + View3PC1, data = temp2[[1]])

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -0.209548 -0.055564 -0.005481 0.064010 0.233397

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) -0.002580 0.008185 -0.315 0.75326

#> View1PC1 -0.107701 0.039207 -2.747 0.00718 **

#> View2PC1 -0.327959 0.052230 -6.279 9.87e-09 ***

#> View3PC1 0.269772 0.065353 4.128 7.80e-05 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 0.08185 on 96 degrees of freedom

#> Multiple R-squared: 0.3564, Adjusted R-squared: 0.3363

#> F-statistic: 17.72 on 3 and 96 DF, p-value: 3.113e-09

summary( lm( age.2 ~ View1PC1 + View2PC1 + View3PC1, data=temp2[[1]] ))

#>

#> Call:

#> lm(formula = age.2 ~ View1PC1 + View2PC1 + View3PC1, data = temp2[[1]])

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -0.14289 -0.05913 0.00078 0.04666 0.19685

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) -0.005101 0.007391 -0.690 0.492

#> View1PC1 0.170772 0.035406 4.823 5.30e-06 ***

#> View2PC1 -0.219208 0.047166 -4.648 1.07e-05 ***

#> View3PC1 -0.042624 0.059016 -0.722 0.472

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 0.07391 on 96 degrees of freedom

#> Multiple R-squared: 0.4742, Adjusted R-squared: 0.4577

#> F-statistic: 28.85 on 3 and 96 DF, p-value: 2.19e-13

summary( lm( age.3 ~ View1PC1 + View2PC1 + View3PC1, data=temp2[[1]] ))

#>

#> Call:

#> lm(formula = age.3 ~ View1PC1 + View2PC1 + View3PC1, data = temp2[[1]])

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -0.276583 -0.049161 0.005662 0.051539 0.233027

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) -0.006146 0.008204 -0.749 0.456

#> View1PC1 0.243021 0.039297 6.184 1.52e-08 ***

#> View2PC1 0.035423 0.052350 0.677 0.500

#> View3PC1 -0.301835 0.065503 -4.608 1.25e-05 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 0.08204 on 96 degrees of freedom

#> Multiple R-squared: 0.3514, Adjusted R-squared: 0.3312

#> F-statistic: 17.34 on 3 and 96 DF, p-value: 4.479e-09

summary( lm( age.4 ~ View1PC1 + View2PC1 + View3PC1, data=temp2[[1]] ))

#>

#> Call:

#> lm(formula = age.4 ~ View1PC1 + View2PC1 + View3PC1, data = temp2[[1]])

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -0.130174 -0.029903 0.003014 0.027864 0.107905

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) 0.0002726 0.0045419 0.060 0.95226

#> View1PC1 -0.1382882 0.0217562 -6.356 6.94e-09 ***

#> View2PC1 0.0369849 0.0289825 1.276 0.20500

#> View3PC1 -0.1187168 0.0362646 -3.274 0.00148 **

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 0.04542 on 96 degrees of freedom

#> Multiple R-squared: 0.802, Adjusted R-squared: 0.7958

#> F-statistic: 129.6 on 3 and 96 DF, p-value: < 2.2e-16

summary( lm( age.5 ~ View1PC1 + View2PC1 + View3PC1, data=temp2[[1]] ))

#>

#> Call:

#> lm(formula = age.5 ~ View1PC1 + View2PC1 + View3PC1, data = temp2[[1]])

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -0.183167 -0.062915 0.002871 0.052293 0.233107

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) 0.005220 0.009028 0.578 0.5645

#> View1PC1 0.074145 0.043246 1.715 0.0897 .

#> View2PC1 -0.185996 0.057610 -3.229 0.0017 **

#> View3PC1 0.132766 0.072084 1.842 0.0686 .

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 0.09028 on 96 degrees of freedom

#> Multiple R-squared: 0.2154, Adjusted R-squared: 0.1909

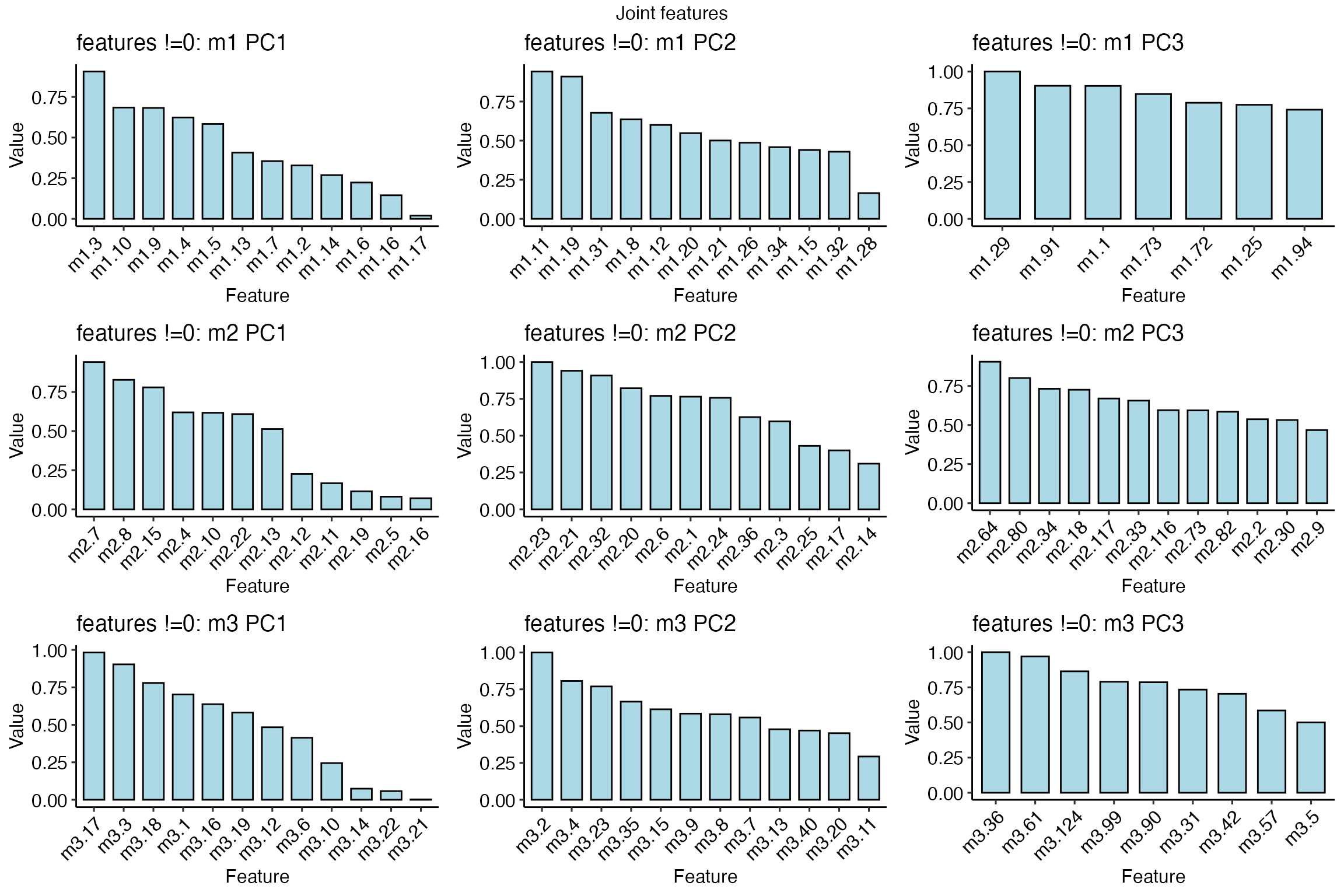

#> F-statistic: 8.784 on 3 and 96 DF, p-value: 3.326e-05Sparse Feature Selection

SiMLR incorporates sparse feature selection, allowing users to identify a minimal subset of features that contribute most to the joint embedding space. This can be particularly advantageous in settings where interpretability is crucial, such as biomarker discovery in biological datasets.

pp=plot_features( simres2 )

grid.arrange( grobs=pp, nrow=3, top='Joint features' )

Improved Model Interpretability

By projecting the data into a joint space, SiMLR helps improve the interpretability of machine learning models. The sparse feature selection ensures that only the most informative features are retained, making it easier to understand the relationships between the input data and the model’s predictions.

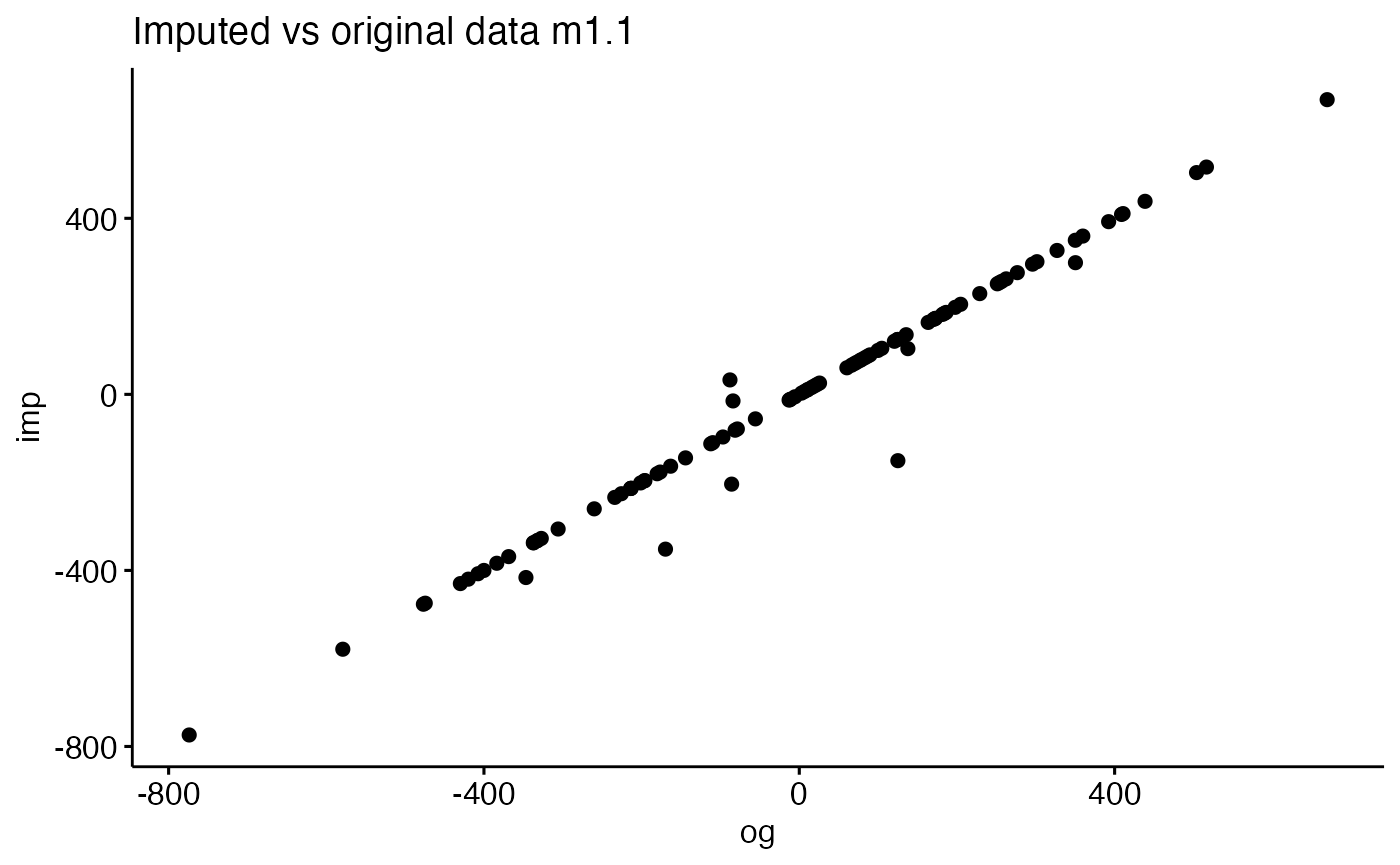

Cross-Modal Prediction or Imputation

In scenarios where you have multiple data modalities but missing information scattered across some of the views, SiMLR can be used to impute the missing data by leveraging the relationships between the available modalities. This cross-modal prediction capability is particularly useful in multi-omics studies and other fields where complete data is often unavailable.

popdfi=popdf

proportion <- 0.25

num_NAs <- ceiling(proportion * nrow(popdfi) * ncol(popdfi))

indices <- arrayInd(sample(1:(nrow(popdfi) * ncol(popdfi)), num_NAs), .dim = dim(popdfi))

popdfi[indices] <- NA

nms=names(simres2)

nsimx=3

sep='.'

imputedcols=c()

for ( n in nms ) {

for ( v in 1:nsimx ) {

thiscol=paste0(n,sep,v)

if ( any( is.na( popdfi[,thiscol] ) )) {

imputedcols=c(imputedcols,thiscol)

popdfi = simlr_impute( popdfi, nms, v, n, separator=sep )

}

}

}

impresult=data.frame( og=popdf[,imputedcols[1]], imp=popdfi[,imputedcols[1]])

ggscatter( impresult, 'og', 'imp' ) + ggtitle(paste("Imputed vs original data",imputedcols[1]))

#> Warning: Removed 5 rows containing missing values or values outside the scale range

#> (`geom_point()`).

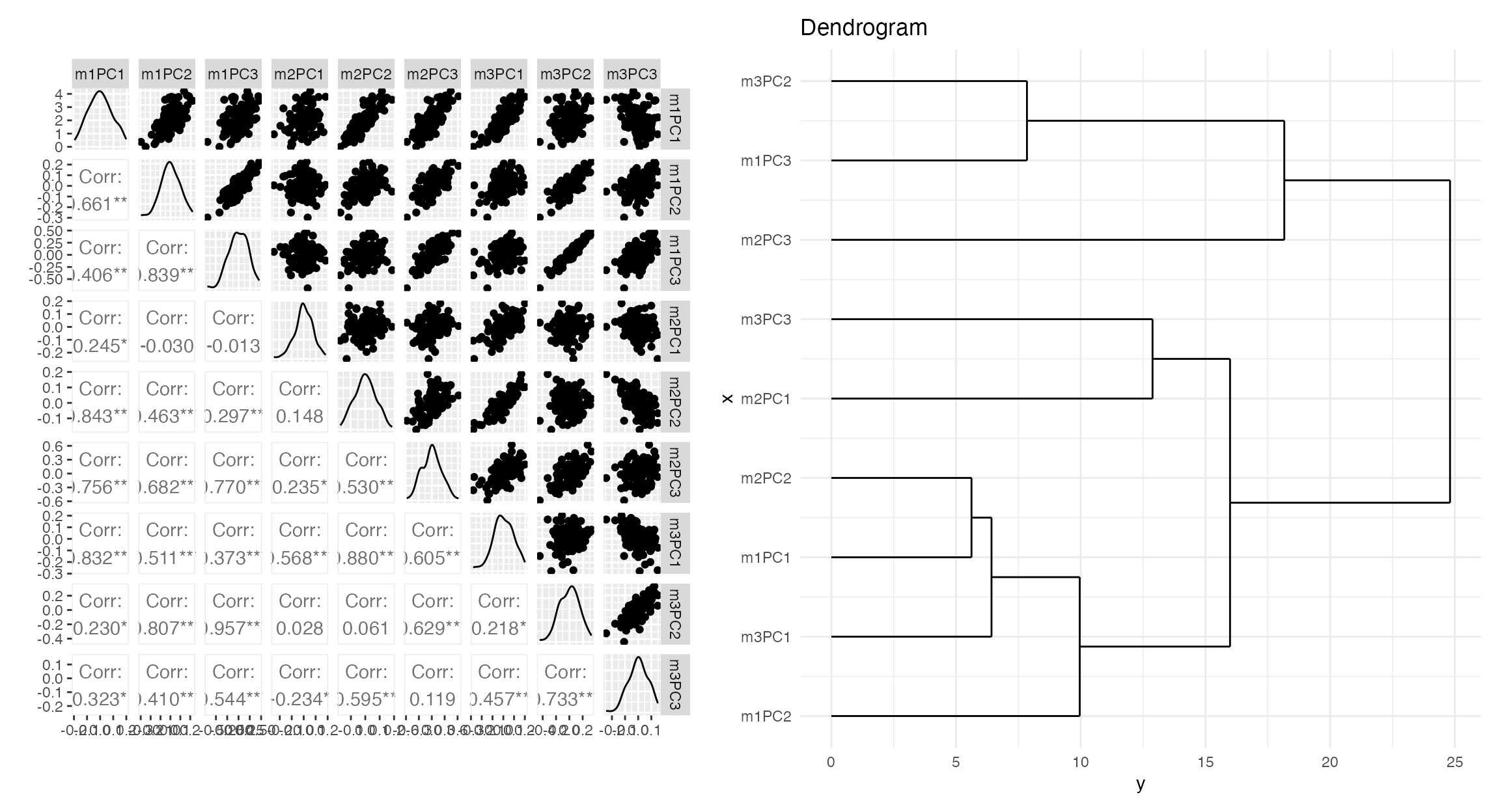

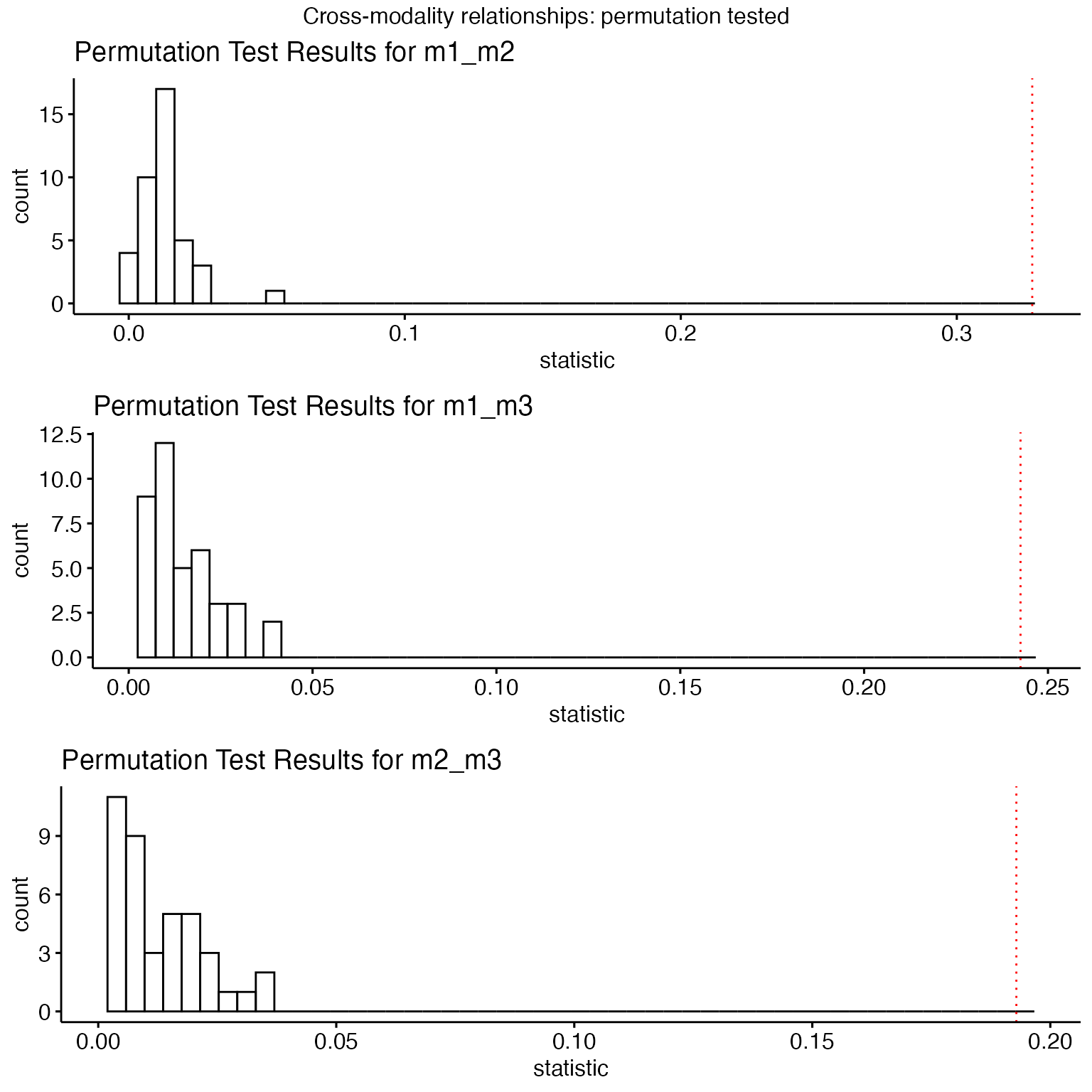

Testing Significance of Joint Relationships

We use a metric (rvcoef) that is not directly optimized

to assess the significance of the simlr result versus permuted data. The

RV coefficient is analogous to the Pearson correlation coefficient but

is used for comparing two matrices (or datasets) rather than two

variables. It measures the similarity of the column spaces (subspaces)

spanned by the columns of two matrices. We compute the

rvcoef across all pairs of data at each permutation and

compare to the original values. Another alternative

(diagcorr) is also possible.

diagcorr = function( X,Y ) mean(abs(diag(cor(X,Y))))

initu = initializeSimlr( matlist, 3, jointReduction = TRUE )

np=40

myperm = simlr.perm( matlist,

energyType='regression',

constraint=constr,scale=prepro,

initialUMatrix=initu,

iterations=500,

nperms=np, FUN=adjusted_rvcoef, verbose=TRUE )

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.119373 7.110481 4.533551

#> Norm Weights: 3.119, 7.11, 4.534

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.605873 6.779412 4.728773

#> Norm Weights: 3.606, 6.779, 4.729

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3338 | Best.Energy: 0.3338 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.353512 7.632748 4.766009

#> Norm Weights: 3.354, 7.633, 4.766

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3338 | Best.Energy: 0.3338 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.410867 6.940708 4.881424

#> Norm Weights: 3.411, 6.941, 4.881

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3334 | Best.Energy: 0.3334 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.341475 7.113242 4.824803

#> Norm Weights: 3.341, 7.113, 4.825

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.380806 7.024959 4.455000

#> Norm Weights: 3.381, 7.025, 4.455

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3338 | Best.Energy: 0.3338 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.388814 6.750244 4.824568

#> Norm Weights: 3.389, 6.75, 4.825

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3334 | Best.Energy: 0.3334 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.410218 7.498827 4.221164

#> Norm Weights: 3.41, 7.499, 4.221

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3334 | Best.Energy: 0.3334 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.309547 7.008600 4.136867

#> Norm Weights: 3.31, 7.009, 4.137

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.413913 6.734840 4.554724

#> Norm Weights: 3.414, 6.735, 4.555

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.412406 6.629812 4.469787

#> Norm Weights: 3.412, 6.63, 4.47

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3334 | Best.Energy: 0.3334 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.454967 6.794718 4.806324

#> Norm Weights: 3.455, 6.795, 4.806

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3334 | Best.Energy: 0.3334 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.516603 7.188520 4.269432

#> Norm Weights: 3.517, 7.189, 4.269

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3337 | Best.Energy: 0.3337 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.795535 7.276929 4.988741

#> Norm Weights: 3.796, 7.277, 4.989

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.258633 7.093432 4.817820

#> Norm Weights: 3.259, 7.093, 4.818

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3336 | Best.Energy: 0.3336 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.538885 6.698644 4.981111

#> Norm Weights: 3.539, 6.699, 4.981

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.474009 7.101863 4.404884

#> Norm Weights: 3.474, 7.102, 4.405

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3334 | Best.Energy: 0.3334 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.294782 7.213067 4.652329

#> Norm Weights: 3.295, 7.213, 4.652

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.310270 7.213983 4.204553

#> Norm Weights: 3.31, 7.214, 4.205

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.828284 6.933832 4.567220

#> Norm Weights: 3.828, 6.934, 4.567

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3341 | Best.Energy: 0.3341 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.286339 6.814044 4.566057

#> Norm Weights: 3.286, 6.814, 4.566

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.612617 7.127943 4.629671

#> Norm Weights: 3.613, 7.128, 4.63

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.796653 7.260534 4.189594

#> Norm Weights: 3.797, 7.261, 4.19

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3337 | Best.Energy: 0.3337 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.235175 7.772221 4.534052

#> Norm Weights: 3.235, 7.772, 4.534

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.245054 7.019586 4.219374

#> Norm Weights: 3.245, 7.02, 4.219

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.358093 7.260168 4.647634

#> Norm Weights: 3.358, 7.26, 4.648

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.142433 6.790014 4.688565

#> Norm Weights: 3.142, 6.79, 4.689

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.118724 7.227603 4.536603

#> Norm Weights: 3.119, 7.228, 4.537

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.567306 6.719840 4.518456

#> Norm Weights: 3.567, 6.72, 4.518

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 2.952451 6.593536 4.711115

#> Norm Weights: 2.952, 6.594, 4.711

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.484742 7.161973 4.377775

#> Norm Weights: 3.485, 7.162, 4.378

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3333 | Best.Energy: 0.3333 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.689624 6.922378 4.391847

#> Norm Weights: 3.69, 6.922, 4.392

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3334 | Best.Energy: 0.3334 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

#> • domain-knowledge : 0

#> ----------------------

#>

#> Setting adaptive orthogonality/energy weights based on first few iterations...

#> [1] "Setting adaptive orthogonality/energy weights based on first few iterations..."

#> Domain Weights: 1, 1, 1

#> View1 View2 View3

#> 3.281339 6.625239 4.658988

#> Norm Weights: 3.281, 6.625, 4.659

#> Orth Weights: 0, 0, 0

#> It: 2 | Energy: 0.3335 | Best.Energy: 0.3335 (at iter 2) | Ortho: 0.0000

#> ~~Convergence criteria met @ 53

#> No improvement over 10% of max iterations 50

#> --Did not converge after 53 iterations.

#>

#> --- Method Summary ---

#> • Mixer Algorithm : svd

#> • Energy Type : regression

#> • Sparseness Alg. : NA

#> • expBeta : 0

#> • constraint : ortho

#> • constraint-it : 1

#> • constraint-wt : 0.02

#> • optimizationStyle: adam

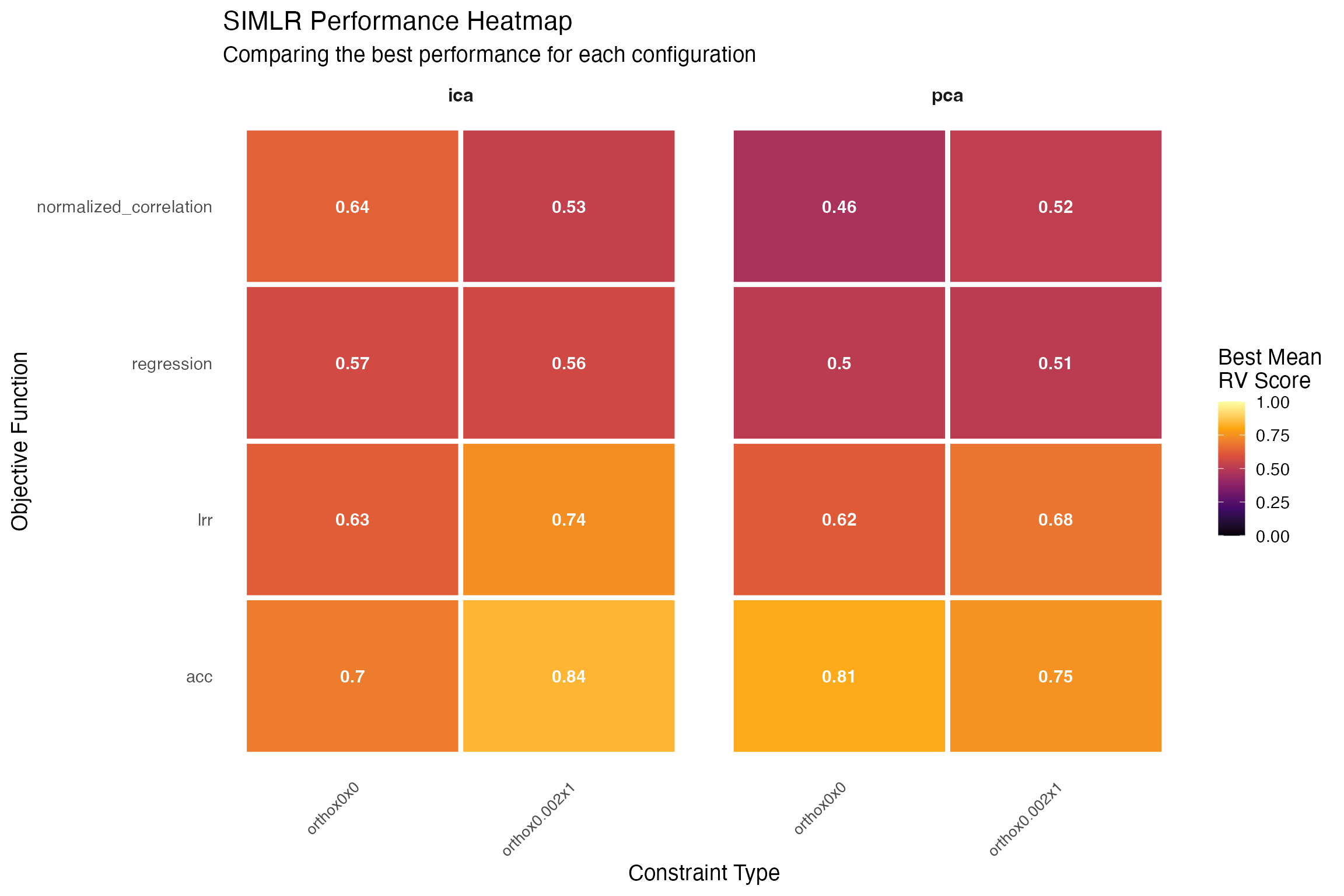

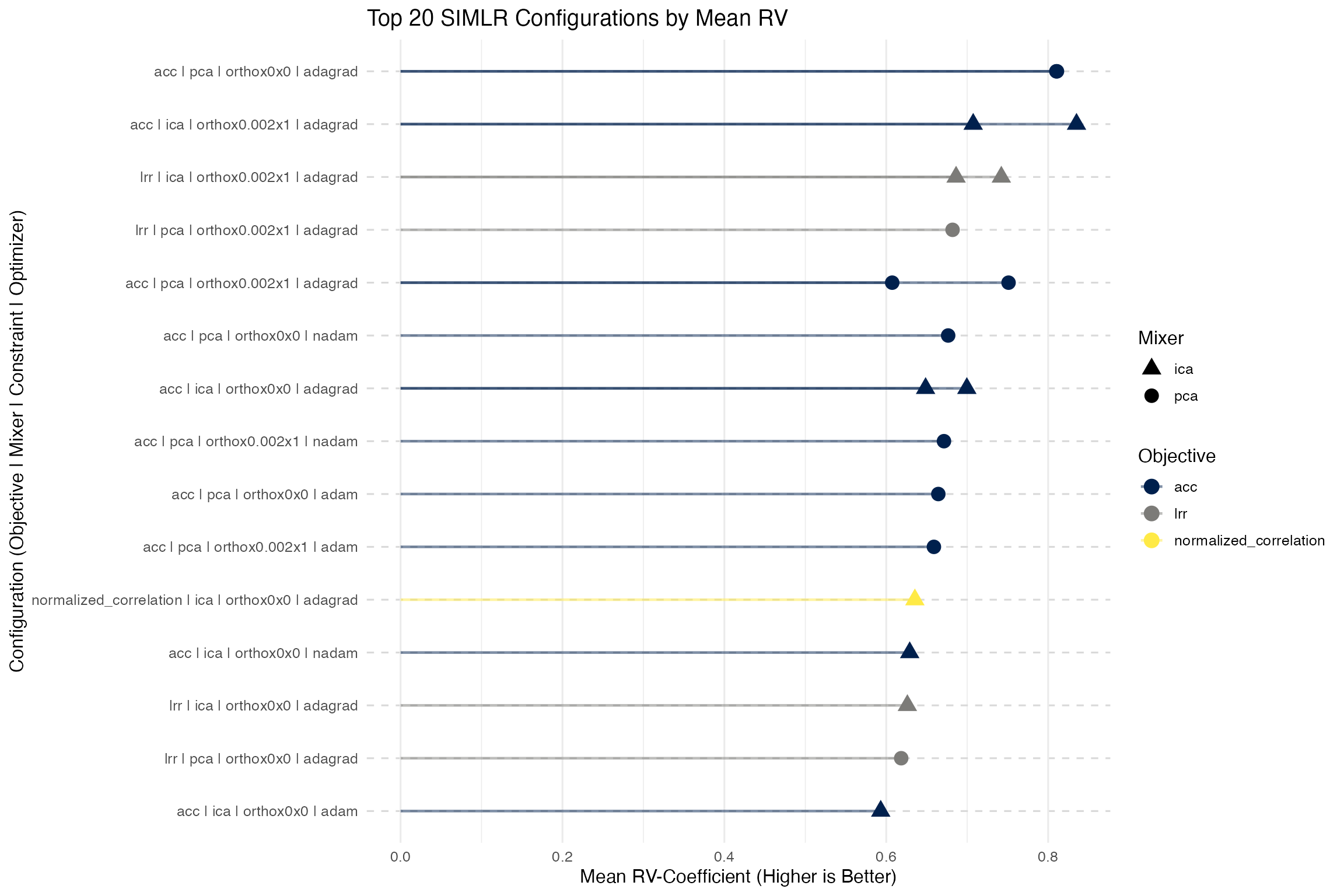

#> • domain-knowledge : 0